Tom Herbert, SiPanda CTO, November 3, 2024

One of the fundamental truths in programming is that the tactics and strategies for managing memory is a bellwether of system performance. It's actually pretty simple: if we minimize memory copies and cache misses we get good performance, if we don't we see bad performance! This holds true in networking where we need to process packets in memory at very high rates (we're talking terabits of data per second and billions of packets per second).

Packet data is very often composed of data that is scattered in noncontiguous memory buffers, and when the packet is transmitted if the memory buffers are gathered to form one contiguous packet-- hence, representation of packet data is called scatter-gather. It follows that a lot of the performance of a networking stack is tied to how well we can manage and process scatter-gather data. As we leverage hardware features like Domain Specific Accelerators, the need for a universal metadata structure for scatter-gather data that can be seamlessly shared between software and hardware becomes clear. To that end, we propose Packet Vector Buffers, or pvbufs, as that universal metadata structure!

Where we are today

Fundamentally, scatter-gather data is represented by a list buffer descriptors, where for each buffer there is a pointer and length. There are two common data structures used for this: linked lists and arrays of IO vectors

Linked lists are commonly used in Operating Systems for representing packets with scatter-gather data. mbufs are data structures that are used in various BSD Operating Systems and are available in DPDK libraries. sk_buffs are the metadata packet structure in the Linux kernel. Both mbufs and sk_buffs represent scatter-gather data as a linked list of the respective structures. For example, here's the start of the mbuf structure:

struct mbuf {

struct mbuf *m_next;

struct mbuf *m_nextpkt;

caddr_t m_data;

int m_len;

short m_type;

int m_flags;

/* More fields... */

}m_data is a pointer to a data buffer and m_len is the length of the buffer. mbufs are linked together via m_next to create a linked list of scatter-gather buffers. The rest of the fields in the mbuf are ancillary information that provides other information and interpretation of the buffer or packet. An sk_buff structure has the same basic fields as an mbuf and also contains a bunch of fields for ancillary information.

Linked lists are good for building scatter-lists on the fly, and operations like splitting and merging packets are fairly straightforward. On the other hand, they aren't very space efficient (each data buffer needs a metadata buffer allocated), and walking a long list is expensive. mbufs or sk_buffs are OS specific with many fields that would have no relevance to hardware. Also, they are large structures with sizes often greater than a kilobyte which raises the specter of cache misses and a lot of work in initializing the structures (even zero'ing a data structure has cost). For a hardware-friendly data structure we really want something lighter weight.

An IO vector is a pair of a pointer and length. Often IO vectors are used in an array to represent scatter-gather data. IO vectors are defined in POSIX as an iovec structure:

struct iovec {

void *iov_base; /* Pointer to data */

size_t iov_len; /* Length of data */

}Anyone who's ever programmed a reasonably non-trivial network application will know about iovecs-- for instance, they are used as an argument in sendmsg and recvmsg socket calls for sending and receiving data stored in scatter/gather lists.

Arrays of IO vectors have some nice properties. They are more space efficient than linked lists, for instance we can put five iovecs into one sixty-four byte cacheline so walking the packet data results in fewer cache misses. Arrays of IO Vectors are simpler structures than linked lists and they're OS agnostic which makes them more portable than sk_buffs or mbufs. On the other hand, they're not so useful for building scatter-gather lists on the fly and operations like splitting or merging packets are much harder with IO vectors than with linked lists. As a candidate for a universal data structure for scatter-gather, IO vectors come close, but they're just a bit too inflexible and don't scale for holding large amounts of data.

Packet IO Vectors (pvbufs)

So linked lists and arrays of IO vectors both have their pluses and minusess, but neither one completely fits the bill as a universal scatter-gather metadata structure, So what's the solution then?... How 'bout we create a hybrid to get the best of both worlds? That's Packet IO Vectors, aka pvbufs, is!

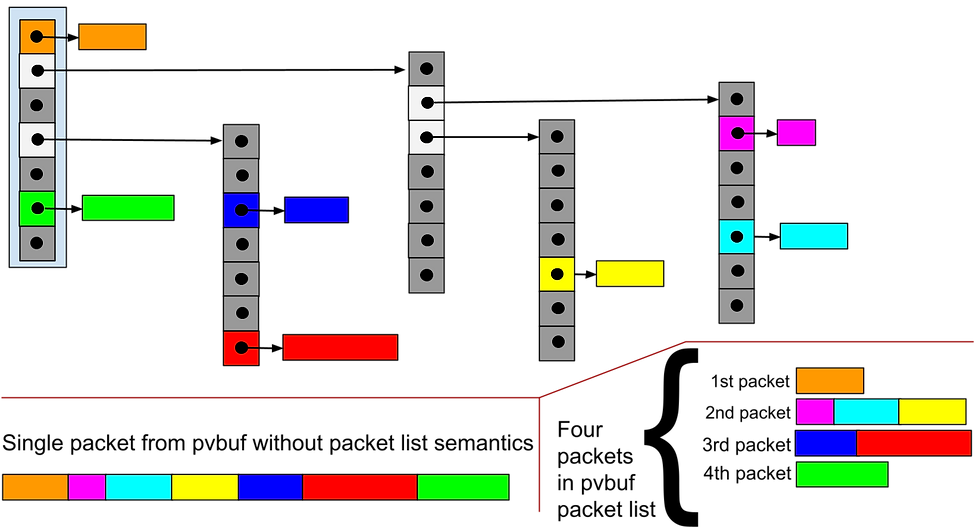

A pvbuf is a data structure that contains an array of IO vectors or pvbuf iovecs. These iovecs look like POSIX iovec structures except with the twist that an pvbuf iovec may point to another pvbuf instead of just pointing to a memory buffer. This allows a scatter/gather list to be represented by a tree of pvbufs like shown below. pvbufs assume the best characteristics of linked lists and arrays of IO vectors-- they are space efficient and allow random access like in IO vectors, and they have the efficiency of operations, such as splicing objects, that linked lists have.

Pvbufs. The top frame shows a pvbuf hierarchy, the lower left frame shows the linearized data, and the lower right shows the packets if the pvbuf is interpreted as a packet list. The colored blocks are memory buffers holding the packet data, the vertical rectangles are pvbufs.

Pvbuf data structures

A "pvbuf iovec" structure is defined as:

struct pvbuf_iovec {

void *iov_base;

__u32 iov_stag: 4;

__u32 iov_btag: 4;

__u32 iov_len: 24;

}This is basically the same structure as a POSIX iovec except that we steal eight bits out the iov_len field. These bits are used as tags to describe alternate interpretations of the data pointed to by iov_base. Note that the iov_len field shrinks to 24 bits which means the maximum buffer length is 16,777,215 bytes-- obviously that's a whole lot less than the full four gigabytes with thirty-two bits, but in practice it should be plenty large enough.

A "pvbuf" structure contains an array of pvbuf iovecs:

struct pvbuf {

struct pvbuf_iovec iovec[];

};When both iov_stag and iov_btag are zero then the iovec is for a plain buffer. This is interchangeable with a POSIX iovec structure as long as the iov_len is less than 16,777,216.

When iov_stag is one then iov_base is a pointer to another pvbuf and iov_btag gives the size of the iovec buffer in the number of cachelines (64 bytes) minus one. The number of iovecs in the array is then computed by:

num_iovecs = ((iov_btag + 1) * 64) / 12

For instance, if for some iovec iov_stag is 1 and iov_btag is 2 then iov_base points to a pvbuf with sixteen iovecs.

When an iovec contains a pvbuf pointer then the length field indicates the length of data in the embedded pvbuf tree. If the length field is zero then this indicates no information about the data length in the pvbuf, else if the length field is non-zero then it is equal to the sum of all the lengths of iovecs in the pvbuf including the lengths of any embedded pvbufs in the iovec hierarchy of the pvbuf.

Fun with pvbufs

pvbufs themselves are quite simple with few inherent semantics. We can extend them to create a rich set of functionalities and interpretations by assuming external and use case specific semantics.

Pvbufs can contain a list of packets by employing a one level depth hierarchy of pvbufs within pvbufs. In this use case, each top level iovec in a pvbuf would correspond to one packet. Packet list pvbufs can be linked by assuming the last iovec is a pointer to an extended packet list. A pvbuf does not contain any indication that it contains a packet list, this semantic is an external characteristic that is inferred from the context that the pvbuf is used. The picture above illustrates interpreting a pvbuf as a packet list.

Pvbufs are simple data structures whose purpose is just to represent data in a scatter/gather list, and so they contain no ancillary information like mbufs and sk_buffs do. Ancillary information can be attached to a pvbuf as a pseudo header that is interpreted in the context that the pvbuf used. For instance, we might arrange that the first buffer in a pvbuf is itself an sk_buff structure where the packet data starts at the second buffer of the pvbuf. When the pvbuf is passed between functions in the kernel, the first buffer is assumed to be an sk_buff structure by convention. When the pvbuf is sent to a device, the first buffer containing the sk_buff is simply removed for the pvbuf (by zero'ing the first iovec).

Hardware and pvbufs

A major goal of pvbufs is that they're "hardware friendly" in that we can pass the whole packet in scatter-gather data as a single pointer (as opposed the current technique of putting each constituent buffer in its own descriptor on a TX/RX ring). When the hardware is processing the data it would first read the top level pvbuf and then process any iovecs. If an iovec points to another pvbuf, the hardware would load that pvbuf into its local memory and process it.

In some more advanced incarnations, we foresee the day when hardware will be able to take an active role in memory management by allocating and freeing pvbufs as needed. This makes the most sense for accelerator engines in a closed environment like a Smart NIC as described in Domain Specific Accelerators.

SiPanda

SiPanda was created to rethink the network datapath and bring both flexibility and wire-speed performance at scale to networking infrastructure. The SiPanda architecture enables data center infrastructure operators and application architects to build solutions for cloud service providers to edge compute (5G) that don’t require the compromises inherent in today’s network solutions. For more information, please visit www.sipanda.io. If you want to find out more about PANDA, you can email us at panda@sipanda.io. IP described here is covered by patent USPTO 12,026,546 and other patents pending.

Kommentare